
System Design

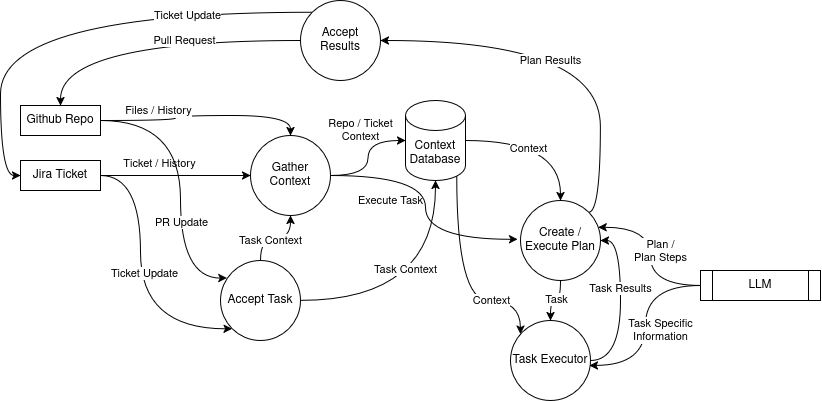

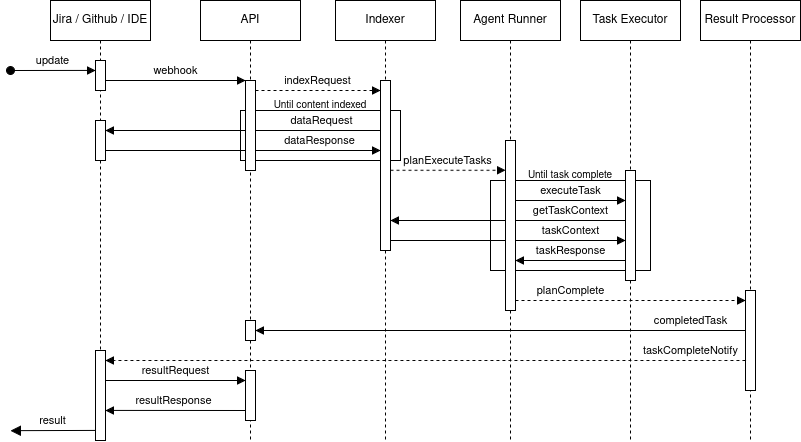

This document contains a system design for a product that helps software engineers by accepting prompts from a variety of sources (GitHub, Jira, IDEs, etc.) and autonomously retrieving context, creating a plan to solve the prompt, executing the plan, verifying the results, and then pushing the results back to the most appropriate tool.

At a very high level, the system looks like this:

Components:

- API - A REST-based API capable of accepting incoming webhooks from a variety of tools. The webhooks generate tasks that require context gathering, planning, execution, and testing to resolve. These tasks are passed to the Indexer to gather context.

- Indexer - An event-based processor that accepts tasks from the API and gathers context (e.g. Git files and commits, Jira ticket status) that needs to be indexed and stored for efficient retrieval by later stages.

- Agent Runner - Takes the task and the associated context gathered by the Indexer and generates an execution plan. It works synchronously with the Task Executor to execute the plan, adjusting the plan as steps are executed to ensure the task is accomplished.

- Task Executor - A protected process running under gVisor (a sandboxed container runtime that isolates the workload from the host kernel) with a set of tools that the Agent Runner can invoke to perform its task. The executor will have a Kubernetes Network Policy applied so that network access is restricted to the bare minimum required to use the tools.

  Example tools include:

  - Code Context - Select appropriate context for code generators.

  - Code Generators - Generate code for given tasks.

  - Compilers - Ensure code compiles.

  - Linters - Ensure code is well formatted and doesn't violate any defined coding standards.

  - Run Tests - Ensure existing tests (unit, integration, system) continue to pass after changes are made, and that new tests pass.

- Result Processor - Responsible for receiving the result of a task from the Agent Runner and disseminating it to interested parties through the API and directly to integrated services.
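The plan, execute, and adjust cycle described for the Agent Runner and Task Executor can be sketched as a loop. This is a minimal sketch under stated assumptions, not the actual implementation: it assumes a plan is an ordered list of steps, that each tool returns a success flag plus output, and that a `replan` callback produces corrective steps when a tool fails. `Step`, `TaskResult`, `run_task`, `replan`, and `max_iterations` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool interface: takes an argument string, returns (succeeded, output).
Tool = Callable[[str], tuple[bool, str]]


@dataclass
class Step:
    tool: str      # name of a tool exposed by the Task Executor
    argument: str  # input passed to that tool


@dataclass
class TaskResult:
    task_id: str
    succeeded: bool = False
    log: list[str] = field(default_factory=list)


def run_task(
    task_id: str,
    plan: list[Step],
    tools: dict[str, Tool],
    replan: Callable[[Step, str], list[Step]],
    max_iterations: int = 10,
) -> TaskResult:
    """Execute a plan step by step, inserting corrective steps when a tool fails."""
    result = TaskResult(task_id=task_id)
    pending = list(plan)
    for _ in range(max_iterations):
        if not pending:
            break
        step = pending.pop(0)
        tool = tools.get(step.tool)
        if tool is None:
            # Only tools registered with the executor may run; anything else aborts the task.
            result.log.append(f"rejected unknown tool {step.tool!r}")
            return result
        ok, output = tool(step.argument)
        result.log.append(f"{step.tool}: {'ok' if ok else 'failed'}")
        if not ok:
            fix = replan(step, output)  # adjust the plan based on the failure output
            if not fix:
                result.log.append("no recovery plan; giving up")
                return result
            pending = fix + pending
    # Success only if every step (including corrective ones) ran within the budget.
    result.succeeded = not pending
    return result
```

Rejecting unregistered tool names mirrors the idea that the executor exposes a fixed tool set; the gVisor sandbox and Network Policy would remain the actual security boundary.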

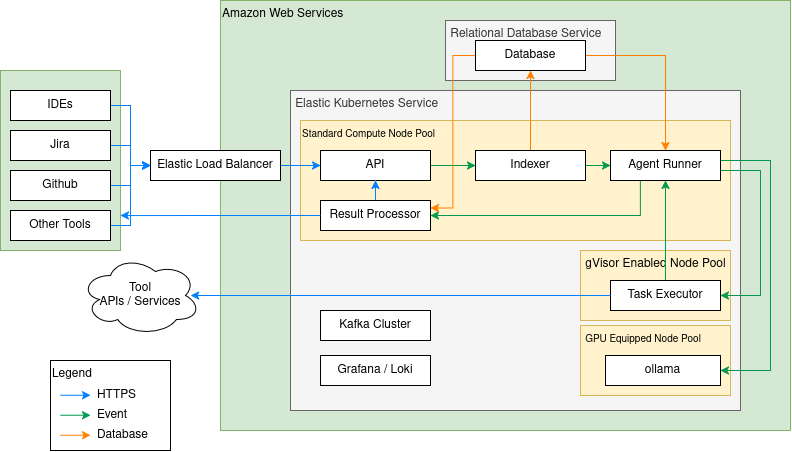

System Dependencies

- The solution sits on top of Amazon Web Services as an industry-standard compute provider. We intentionally avoid AWS products that lack good analogs with other compute providers (e.g. Kinesis, Nova, Bedrock) to avoid vendor lock-in.

- The solution is built and deployed on top of Elastic Kubernetes Service (EKS) to provide a flexible orchestration layer that allows us to deploy, scale, monitor, and repair the application with relatively low effort. Updates on EKS can be orchestrated (e.g. as rolling updates) so that they are delivered without downtime to consumers.

- For asynchronous event flows we'll use Apache Kafka. This will allow us to handle a very large event volume with low performance overhead. Events can be processed as cluster capacity allows, and events will not be dropped if a consuming application becomes unavailable.

- For observability, we'll use Prometheus and Grafana to provide application metrics, and Grafana Loki for logs. This will allow us to see how the application is performing and to identify issues as they arise.

- To provide large language and embedding models, we can host Ollama on GPU-equipped EKS nodes. Models can be distributed via persistent volumes, staged with an init container, and pre-loaded into vRAM before the pod is marked ready. Autoscalers can be used to scale specific model versions up and down based on demand. This doesn't preclude using LLM-as-a-service providers.

- Persistent data storage will be done via PostgreSQL hosted on Amazon Relational Database Service (RDS). The pgvector extension will be used to provide efficient vector searches over embeddings.
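To make the pgvector bullet concrete: with cosine distance, a nearest-neighbour query orders rows by `embedding <=> query` (pgvector's cosine-distance operator) and keeps the top k. The sketch below computes the same exact ranking in plain Python to show what such a query returns. The `chunks` table in the comment and the function names are hypothetical, and on large tables pgvector's approximate indexes (HNSW or IVFFlat) would typically be used instead of an exact scan.

```python
import math

# Roughly equivalent SQL (hypothetical table name):
#   SELECT id FROM chunks ORDER BY embedding <=> %(query)s LIMIT %(k)s;


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity between two non-zero vectors of equal dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: list[float], rows: list[tuple[str, list[float]]], k: int) -> list[str]:
    """Exact top-k search: rank every row by its distance to the query."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]
```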

Scaling Considerations

The system can scale dynamically based on load. A horizontal pod autoscaler can be applied to each component so that it scales up or down with the current load. For "compute" instances, CPU utilization can be used to decide when to scale. For "gpu" instances, an external metric measuring GPU utilization can be used to determine when scaling operations are appropriate. For efficient use of GPU vRAM and to spread load, models can be packaged together so that each package fills most of the available vRAM, and each package can then be scaled independently.
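The scaling rule can be illustrated with the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, which is skipped when the ratio is within a tolerance (0.1 by default). The first function simplifies the real controller (no stabilization windows or pod readiness handling), and its min/max bounds are illustrative. The second function shows one possible way to do the model packaging described above, using first-fit decreasing bin packing over per-model vRAM estimates; the function names, model names, and sizes are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified HPA rule: scale proportionally to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target; avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def pack_models(model_vram_gb: dict[str, float], gpu_vram_gb: float) -> list[list[str]]:
    """First-fit decreasing: group models so each group fits on one GPU."""
    free: list[float] = []        # remaining vRAM per group
    groups: list[list[str]] = []  # model names per group
    for name, size in sorted(model_vram_gb.items(), key=lambda kv: kv[1], reverse=True):
        if size > gpu_vram_gb:
            raise ValueError(f"{name} does not fit on a single GPU")
        for i, remaining in enumerate(free):
            if size <= remaining:
                free[i] -= size
                groups[i].append(name)
                break
        else:
            # No existing group has room; start a new one.
            free.append(gpu_vram_gb - size)
            groups.append([name])
    return groups
```

Each packed group could then be deployed as its own workload with its own autoscaler, which is what would let groups scale independently.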

To accommodate load bursts, the system will operate largely asynchronously. Boundaries between system components will be buffered with Kafka so that each component consumes only what it is able to handle, without losing data or needing complex retry mechanisms. If the number of models grows large, a proxy could be developed to dynamically route requests to a backend with the appropriate model pre-loaded.
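The routing proxy mentioned above could start as little more than a table from model name to the backends that have that model pre-loaded, with round-robin selection among them. This is a hypothetical sketch: `ModelRouter` and the backend hostnames are illustrative (11434 is Ollama's default port), and a real proxy would also need health checks and a way to refresh the table as autoscalers add or remove pods.

```python
import itertools
from typing import Iterator


class ModelRouter:
    """Maps a model name to the backends that currently have it loaded."""

    def __init__(self, backends: dict[str, list[str]]) -> None:
        # One independent round-robin cycle per model; models with no backends are skipped.
        self._cycles: dict[str, Iterator[str]] = {
            model: itertools.cycle(urls) for model, urls in backends.items() if urls
        }

    def route(self, model: str) -> str:
        """Return the next backend URL for `model`, or raise if none has it loaded."""
        cycle = self._cycles.get(model)
        if cycle is None:
            raise LookupError(f"no backend has {model!r} loaded")
        return next(cycle)
```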

Testing / Model Migration Strategy

An essential property of any AI-based system is the ability to measure its performance over time. This is important to ensure that models can be safely migrated as the market evolves and better models are released.

A simple approach is to maintain a representative set of example tasks that are run whenever changes are introduced. Performance should be measured against a baseline on several metrics, such as:

- Number of agentic iterations required to solve the task (fewer is better).

- Amount of code / responses generated (less is better).

- Success rate (more is better).
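Comparing a candidate configuration against the baseline on these metrics can be sketched as follows. This assumes each task run records its iteration count, an output size (characters are used here as a stand-in for "amount of code / responses"), and a success flag; `RunResult`, `summarize`, `regressions`, and the 5% tolerance are hypothetical choices rather than part of the design.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RunResult:
    task_id: str
    iterations: int    # agentic iterations used (fewer is better)
    output_chars: int  # size of generated code/responses (less is better)
    succeeded: bool    # whether the task was solved (higher rate is better)


def summarize(results: list[RunResult]) -> dict[str, float]:
    return {
        "mean_iterations": mean(r.iterations for r in results),
        "mean_output_chars": mean(r.output_chars for r in results),
        "success_rate": sum(r.succeeded for r in results) / len(results),
    }


def regressions(
    baseline: list[RunResult], candidate: list[RunResult], tolerance: float = 0.05
) -> list[str]:
    """Names of metrics where the candidate is worse than the baseline beyond `tolerance`."""
    base, cand = summarize(baseline), summarize(candidate)
    worse = [
        metric
        for metric in ("mean_iterations", "mean_output_chars")
        if cand[metric] > base[metric] * (1 + tolerance)  # relative tolerance
    ]
    if cand["success_rate"] < base["success_rate"] - tolerance:  # absolute tolerance
        worse.append("success_rate")
    return worse
```

Running the same task set before and after a model swap and requiring `regressions(...)` to return an empty list is one possible way to gate a migration.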

Over time, as problematic areas are identified, new tasks should be added to the set to improve its coverage.

Migrating / Managing Embeddings

One particularly sensitive area for model migration is embedding models. Better models are routinely published, but re-indexing data is expensive, especially when volumes are large.

The vector database should record which model produced each embedding. When a new embedding model is introduced, the indexer should use it to index new content, while old content remains searchable using the model that indexed it. If a model is permanently retired, the content it indexed should be re-indexed with a supported embedding model. The vector database should allow the same content to be indexed with multiple models at the same time.
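A minimal in-memory sketch of the model-tagged storage described above: each vector is keyed by both content ID and the model that produced it, a query is only compared with vectors from the same model, and retiring a model returns the content that no longer has an embedding from any supported model. `EmbeddingStore` and the model names are hypothetical; in PostgreSQL this would likely correspond to a model column stored alongside the pgvector column, with searches filtered on that column.

```python
from dataclasses import dataclass, field


@dataclass
class EmbeddingStore:
    # (content_id, model) -> vector, so the same content can be indexed by several models.
    vectors: dict[tuple[str, str], list[float]] = field(default_factory=dict)
    retired: set[str] = field(default_factory=set)

    def add(self, content_id: str, model: str, vector: list[float]) -> None:
        if model in self.retired:
            raise ValueError(f"{model} is retired; index with a supported model")
        self.vectors[(content_id, model)] = vector

    def models_for(self, content_id: str) -> set[str]:
        return {m for (c, m) in self.vectors if c == content_id}

    def searchable(self, model: str) -> dict[str, list[float]]:
        # Vectors from different models live in different spaces, so a query
        # embedded with `model` is only compared against vectors from `model`.
        return {c: v for (c, m), v in self.vectors.items() if m == model}

    def retire(self, model: str) -> list[str]:
        """Drop a model's vectors and return content IDs that now need re-indexing."""
        self.retired.add(model)
        affected = [c for (c, m) in self.vectors if m == model]
        for c in affected:
            del self.vectors[(c, model)]
        # Content still embedded by another supported model stays searchable.
        return sorted(c for c in affected if not self.models_for(c))
```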